Recent work in NLP has shown promising results in training models on a large number of tasks to achieve better generalization. However, it is not well understood how tasks are related, or how to choose training tasks that help with a new task. In this work, we investigate whether knowing task relationships via pairwise task transfer improves the selection of one or more source tasks that help a model learn a new target task.

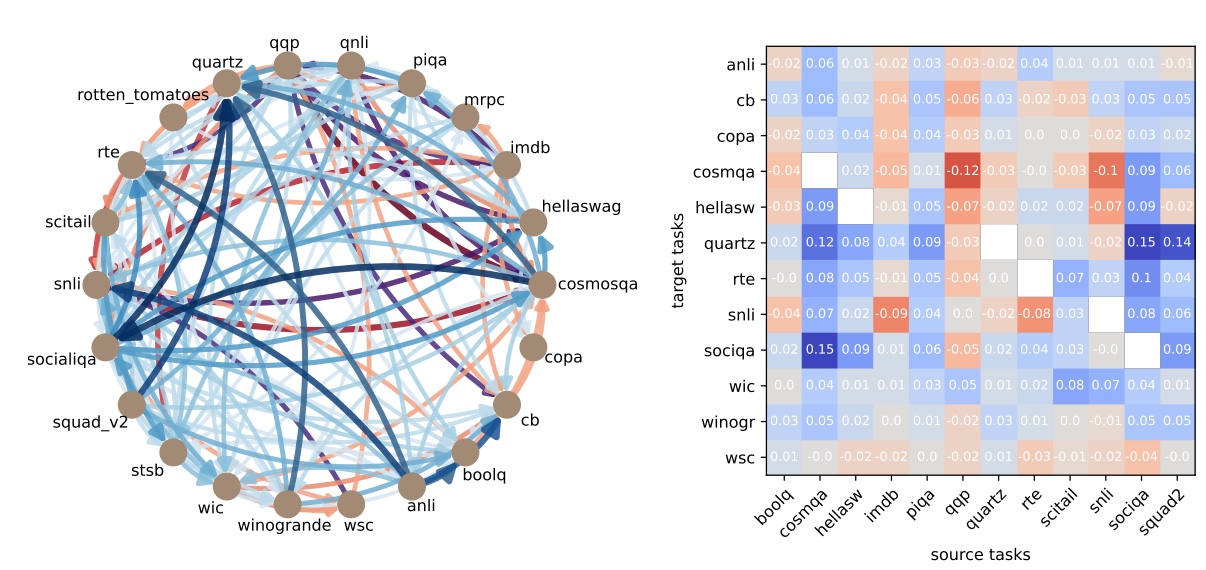

We provide TaskWeb, a large-scale benchmark of pairwise task transfers for 22 NLP tasks using three different model types, sizes, and adaptation methods, spanning about 25,000 experiments. Based on our analysis of TaskWeb, we then design a new method, TaskShop, which uses TaskWeb to estimate the benefit of using a source task for learning a new target task and to choose a subset of helpful training tasks for multi-task learning.
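
Each pairwise transfer experiment compares target performance with and without an intermediate source task. The sketch below assumes, for illustration only, that transfer is measured as the relative change in the target score; the paper defines its own metric, and `transfer_score` and the numbers are hypothetical.

```python
def transfer_score(target_only: float, source_then_target: float) -> float:
    """Hypothetical transfer score: relative change in target performance when
    a model is trained on the source task before the target task.
    Positive means the source helped; negative means it hurt."""
    if target_only <= 0:
        raise ValueError("baseline score must be positive")
    return (source_then_target - target_only) / target_only


# Made-up accuracies for one source -> target pair.
print(round(transfer_score(0.70, 0.77), 3))  # 0.1
```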

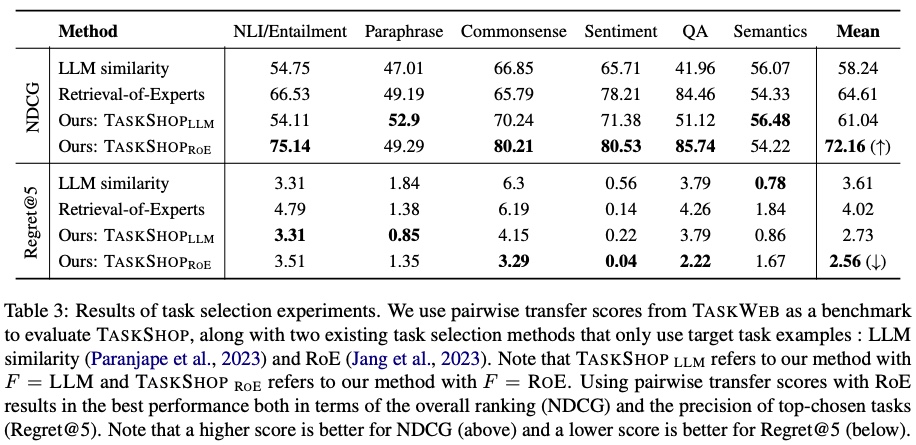

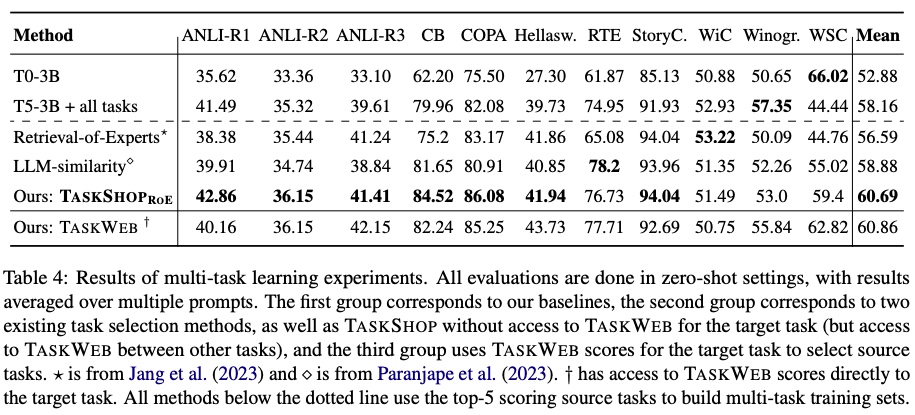

Our method improves overall rankings and top-k precision of source tasks by 12% and 29%, respectively. We also use TaskShop to build much smaller multi-task training sets that improve zero-shot performance across 11 different target tasks by at least 4.3%.
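
Top-k precision asks how many of the k highest-ranked source tasks are actually helpful for the target. A minimal sketch, assuming the set of truly helpful sources is known from real transfer experiments; the task names are placeholders.

```python
def precision_at_k(predicted: list[str], helpful: set[str], k: int) -> float:
    """Fraction of the top-k predicted source tasks that are truly helpful."""
    if k < 1:
        raise ValueError("k must be positive")
    top_k = predicted[:k]
    return sum(task in helpful for task in top_k) / k


ranking = ["task_a", "task_b", "task_c", "task_d"]  # hypothetical predicted order
truly_helpful = {"task_a", "task_c", "task_e"}      # hypothetical ground truth
print(precision_at_k(ranking, truly_helpful, k=2))  # 0.5
```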

TaskWeb consists of pairwise transfer scores for 22 NLP tasks spanning three different model types (T5, GPT-2, RoBERTa), sizes (60M to 770M parameters), and adaptation methods (finetuning, Adapter-tuning, BitFit).
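
One way to picture TaskWeb is as a table of scores keyed by setting, source, and target, where a setting is one model, size, and adaptation-method combination. The sketch below assumes that layout and collapses settings by a plain average; both the setting labels and the averaging are illustrative assumptions, not necessarily how the released benchmark is organized.

```python
from collections import defaultdict
from statistics import mean


def average_over_settings(
    scores: dict[tuple[str, str, str], float],
) -> dict[tuple[str, str], float]:
    """Collapse per-setting transfer scores into one score per (source, target).

    Keys of `scores` are (setting, source, target); setting names such as
    "t5-large/finetune" are hypothetical labels."""
    grouped: dict[tuple[str, str], list[float]] = defaultdict(list)
    for (_setting, source, target), value in scores.items():
        grouped[(source, target)].append(value)
    return {pair: mean(values) for pair, values in grouped.items()}


# Made-up scores for two settings and two directed task pairs.
scores = {
    ("t5-large/finetune", "task_a", "task_b"): 0.10,
    ("gpt2-medium/bitfit", "task_a", "task_b"): 0.02,
    ("t5-large/finetune", "task_b", "task_a"): -0.04,
    ("gpt2-medium/bitfit", "task_b", "task_a"): -0.02,
}
print(average_over_settings(scores))
```

Keeping source and target as an ordered pair matters because transfer is directional: a helps b does not imply b helps a.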

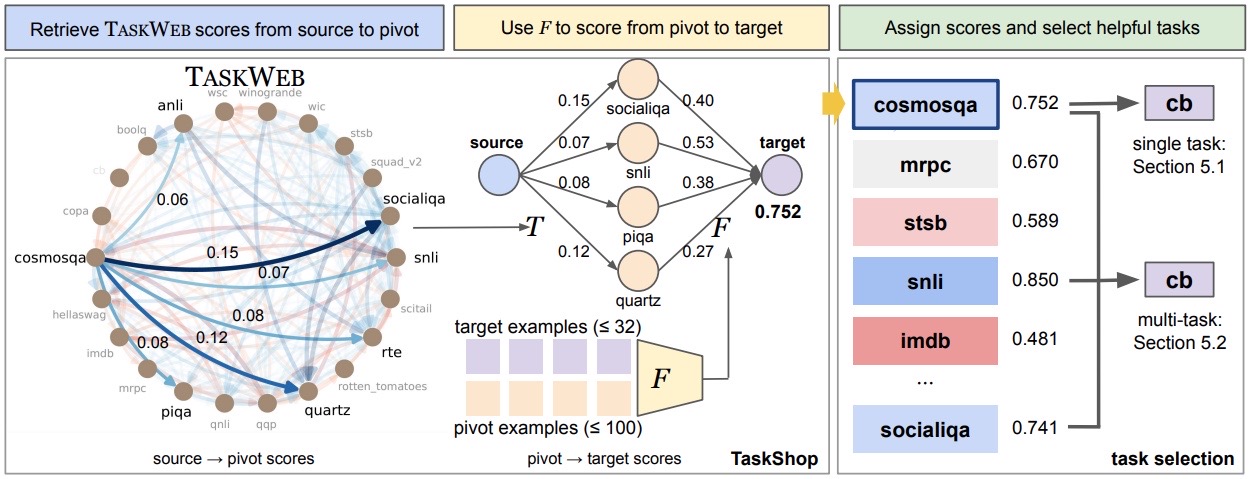

Based on our analysis of TaskWeb, we propose a new method, TaskShop, that estimates the transferability from a source task in TaskWeb to a new target task given only a small number of target examples. As shown in the main figure, TaskShop combines two quantities: the pairwise transfer score from the source task to a "pivot" task that is also in TaskWeb, and the estimated transfer from the pivot task to the target task, computed with an existing method that only requires task examples. This process is repeated for every pivot task in TaskWeb, and the results are averaged to produce an estimated transfer score from the source to the target. Further details are given in our paper.
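
The pivot-averaging step can be sketched as follows. This is a minimal illustration, not the paper's exact scoring function: it assumes each pivot contributes the product of the TaskWeb score (source to pivot) and the estimated pivot-to-target score, and that the latter was already computed by some example-based estimator. The function name and inputs are hypothetical.

```python
from statistics import mean


def taskshop_estimate(
    source: str,
    pivots: list[str],
    web: dict[tuple[str, str], float],
    pivot_to_target: dict[str, float],
) -> float:
    """Estimate transfer from `source` to an unseen target through pivot tasks.

    web[(source, pivot)]: pairwise transfer score looked up in TaskWeb.
    pivot_to_target[pivot]: estimated pivot -> target transfer (assumed
        precomputed by an example-based method).
    Each pivot contributes the product of the two scores (an assumed
    combination rule); contributions are averaged over usable pivots."""
    usable = [
        p for p in pivots
        if p != source and (source, p) in web and p in pivot_to_target
    ]
    if not usable:
        raise ValueError("no pivot task has both scores available")
    return mean(web[(source, p)] * pivot_to_target[p] for p in usable)


web = {("task_a", "task_b"): 0.5, ("task_a", "task_c"): 0.2}  # made-up scores
pivot_to_target = {"task_b": 0.8, "task_c": 0.1}              # made-up estimates
print(taskshop_estimate("task_a", ["task_b", "task_c"], web, pivot_to_target))
```

Averaging over many pivots is what lets a single noisy pivot-to-target estimate have limited influence on the final score.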

TaskShop returns better overall rankings and identifies more helpful top-k tasks than existing methods.
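
A common way to score the quality of a whole ranking is normalized discounted cumulative gain (NDCG). It is shown here only as an illustrative ranking metric; this page does not say which ranking measure underlies the reported numbers. The relevance values are hypothetical gains, for example derived from true transfer scores.

```python
import math


def ndcg(predicted: list[str], relevance: dict[str, float]) -> float:
    """NDCG of a predicted ranking of source tasks, using linear gains.

    relevance[task] is a non-negative gain; tasks missing from
    `relevance` contribute zero gain."""

    def dcg(order: list[str]) -> float:
        # Gains are discounted by log2 of (1-based position + 1).
        return sum(relevance.get(t, 0.0) / math.log2(i + 2) for i, t in enumerate(order))

    # Ideal ordering: the highest-gain tasks first, truncated to the same length.
    ideal = sorted(relevance, key=relevance.get, reverse=True)[: len(predicted)]
    best = dcg(ideal)
    return dcg(predicted) / best if best > 0 else 0.0


gains = {"task_a": 3.0, "task_b": 2.0, "task_c": 0.0}
print(ndcg(["task_a", "task_b", "task_c"], gains))  # 1.0
```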

Building smaller multi-task sets with TaskShop improves target performance over other models trained on larger sets of tasks.
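
Building a smaller multi-task set can be pictured as keeping only the sources with the highest estimated transfer to the target. The sketch assumes a fixed budget `k` and made-up scores; how the paper chooses the set size is described there, not here.

```python
def select_training_tasks(scores: dict[str, float], k: int) -> list[str]:
    """Return the k source tasks with the highest estimated transfer score."""
    if k < 1:
        raise ValueError("k must be positive")
    ranked = sorted(scores, key=lambda task: scores[task], reverse=True)
    return ranked[:k]


estimated = {"task_a": 0.21, "task_b": -0.05, "task_c": 0.12, "task_d": 0.03}
print(select_training_tasks(estimated, k=2))  # ['task_a', 'task_c']
```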

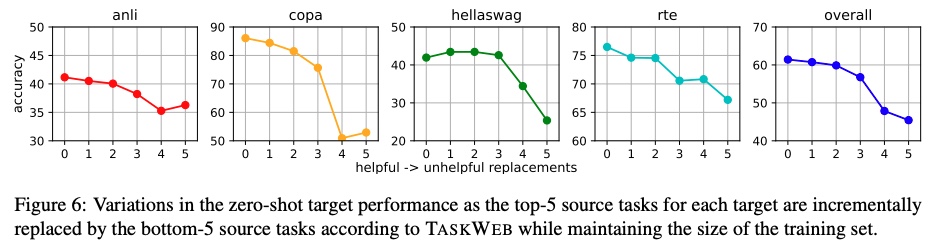

Target performance decreases as helpful tasks in the multi-task training sets are replaced with tasks that TaskWeb identifies as unhelpful.
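
This ablation can be sketched as a series of training mixtures in which an increasing number of helpful tasks is swapped for unhelpful ones. Which helpful tasks are dropped first, and the task names, are assumptions made for illustration.

```python
def replace_with_unhelpful(helpful: list[str], unhelpful: list[str], n: int) -> list[str]:
    """Swap the last n helpful tasks for the first n unhelpful tasks."""
    if not 0 <= n <= min(len(helpful), len(unhelpful)):
        raise ValueError("n out of range")
    return helpful[: len(helpful) - n] + unhelpful[:n]


helpful = ["task_a", "task_b", "task_c", "task_d"]  # hypothetical, best first
unhelpful = ["task_x", "task_y", "task_z"]          # hypothetical, worst first
for n in range(len(unhelpful) + 1):
    print(n, replace_with_unhelpful(helpful, unhelpful, n))
```

Each mixture keeps the same number of tasks, so any drop in target performance reflects which tasks are used rather than how many.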

This website will continue to be updated with content from the paper.

@misc{kim2023taskweb,
  title={TaskWeb: Selecting Better Source Tasks for Multi-task NLP},
  author={Joongwon Kim and Akari Asai and Gabriel Ilharco and Hannaneh Hajishirzi},
  year={2023},
  eprint={2305.13256},
  archivePrefix={arXiv},
  primaryClass={cs.CL}
}